Learning Rate Schedulers

Welcome

Welcome to the Learning Rate Schedulers tutorial. Learning rate schedulers let us adjust the learning rate of the Adam optimization algorithm dynamically during training. That way, we can decrease the learning rate as we approach a minimum of the cost function.

Run this notebook on Google Colab:

Find the documentation of EncoderMap:

https://ag-peter.github.io/encodermap

Goals:

In this tutorial you will learn:

Why we can profit from learning rate schedulers

How to log the current learning rate to TensorBoard

How to implement a learning rate scheduler with an exponentially decaying learning rate

For Google Colab only:

If you’re on Google Colab, uncomment these lines to install EncoderMap.

[1]:

# !wget https://gist.githubusercontent.com/kevinsawade/deda578a3c6f26640ae905a3557e4ed1/raw/b7403a37710cb881839186da96d4d117e50abf36/install_encodermap_google_colab.sh

# !sudo bash install_encodermap_google_colab.sh

If you’re on Google Colab, you also want to download the data we will use:

[2]:

# !wget https://raw.githubusercontent.com/AG-Peter/encodermap/main/tutorials/notebooks_starter/asp7.csv

Import Libraries

Before we can start exploring the learning rate scheduler, we need to import some libraries.

[3]:

import os

import numpy as np

import encodermap as em

import tensorflow as tf

import pandas as pd

from pathlib import Path

%load_ext autoreload

%autoreload 2

/home/kevin/git/encoder_map_private/encodermap/__init__.py:194: GPUsAreDisabledWarning: EncoderMap disables the GPU per default because most tensorflow code runs with a higher compatibility when the GPU is disabled. If you want to enable GPUs manually, set the environment variable 'ENCODERMAP_ENABLE_GPU' to 'True' before importing EncoderMap. To do this in python you can run:

import os; os.environ['ENCODERMAP_ENABLE_GPU'] = 'True'

before importing encodermap.

_warnings.warn(

We will work in the directory runs/lr_scheduler, which we create now.

[4]:

(Path.cwd() / "runs/lr_scheduler").mkdir(parents=True, exist_ok=True)

Why learning rate schedulers? A linear regression example
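A minimal sketch of the idea, using a hypothetical toy problem (not part of EncoderMap): fit y = 2x with a single weight w by plain full-batch gradient descent. With a constant learning rate that is too large, every update overshoots the minimum at w = 2 by exactly as much as it started away from it, so w bounces between 0 and 4 indefinitely. An exponentially decaying learning rate starts from the same value but shrinks the steps, so w settles at the minimum. Plain gradient descent is used here instead of Adam only to keep the arithmetic transparent; the names `gradient` and `fit` are illustrative.

```python
import numpy as np

# Hypothetical toy problem: fit y = 2 * x with a single weight w using
# full-batch gradient descent on the mean squared error.
x = np.array([-1.0, 1.0])
y = 2.0 * x


def gradient(w):
    """Gradient of mean((w * x - y) ** 2) with respect to w."""
    return 2.0 * np.mean(x * (w * x - y))


def fit(learning_rate_fn, n_steps=50, w=0.0):
    """Run gradient descent; learning_rate_fn maps a step to a learning rate."""
    for step in range(n_steps):
        w = w - learning_rate_fn(step) * gradient(w)
    return w


# Constant learning rate: for this data the update maps (w - 2) to -(w - 2),
# so w jumps back and forth across the minimum at w = 2 forever.
w_constant = fit(lambda step: 1.0)

# Exponentially decaying learning rate: same starting value, but the steps
# shrink until the iterate settles at the minimum.
w_decay = fit(lambda step: 1.0 * np.exp(-0.1 * step))

print(f"constant lr: w = {w_constant:.4f}")
print(f"decaying lr: w = {w_decay:.4f}")
```

After an even number of steps the constant-rate run is back at w = 0, still as far from the minimum as at the start, while the decaying run ends very close to w = 2.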

Log the current learning rate to TensorBoard

Before we implement dynamic learning rates, we want a way to log the learning rate to TensorBoard.

Running TensorBoard on Google Colab

To use TensorBoard in Google Colab notebooks, you first need to load the TensorBoard extension:

%load_ext tensorboard

And then activate it with:

%tensorboard --logdir .

The next code cell contains these commands. Uncomment them and then continue.

Running TensorBoard locally

TensorBoard is a visualization tool from the machine learning library TensorFlow, which EncoderMap uses. While the autoencoder neural network is trained during the dimensionality reduction step, several readings are saved in TensorBoard format. All output files are saved to the path defined in parameters.main_path. Set the parameter tensorboard to True to make EncoderMap log to TensorBoard, then navigate to this location in a shell and start TensorBoard.

If you run this tutorial in the provided Docker container, you can open a new console inside the container by typing the following command in a new system shell:

docker exec -it emap bash

Navigate to the location where the runs are saved, e.g.:

cd notebooks_easy/runs/asp7/

Start TensorBoard in this directory with:

tensorboard --logdir .

Then open 0.0.0.0:6006 or 127.0.0.1:6006 in your browser.

In the SCALARS tab of TensorBoard you should see, among other values, the overall cost and its individual contributions. The two most important contributions are auto_cost and distance_cost. auto_cost measures the differences between the inputs and outputs of the autoencoder. distance_cost is the part of the cost function that compares pairwise distances in the input space and in the low-dimensional (latent) space.

Fixing reloading issues: When using TensorBoard, we often ran into issues while training multiple models and writing multiple runs to TensorBoard’s logdir. Reloading the data and even refreshing the web page did not display the data of the current run; we had to kill TensorBoard and restart it to see the new data. Setting reload_multifile to True fixed this issue:

tensorboard --logdir . --reload_multifile True

When you’re on Google Colab, you can load the TensorBoard extension with:

[5]:

# %load_ext tensorboard

# %tensorboard --logdir .

Subclassing EncoderMap’s EncoderMapBaseCallback

The easiest way to log a new variable to TensorBoard is by subclassing EncoderMap’s EncoderMapBaseCallback from the callbacks submodule.

[6]:

?em.callbacks.EncoderMapBaseCallback

As per the docstring of the EncoderMapBaseCallback class, we create the LearningRateLogger class and implement a piece of code in the on_summary_step method.

[7]:

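class LearningRateLogger(em.callbacks.EncoderMapBaseCallback):
    def on_summary_step(self, step, logs=None):
        # Write the optimizer's current learning rate to TensorBoard
        # whenever EncoderMap reaches a summary step.
        with tf.name_scope("Learning Rate"):
            tf.summary.scalar('current learning rate', self.model.optimizer.learning_rate, step=step)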

We can now create an EncoderMap instance and add our new callback with the add_callback method.

[8]:

df = pd.read_csv('asp7.csv')
# all columns except the last hold dihedral angles; the last one holds cluster ids
dihedrals = df.iloc[:, :-1].values.astype(np.float32)
cluster_ids = df.iloc[:, -1].values

parameters = em.Parameters(
    tensorboard=True,
    periodicity=2*np.pi,
    main_path=em.misc.run_path('runs/lr_scheduler'),
    n_steps=100,
    summary_step=5
)

# create an instance of EncoderMap
e_map = em.EncoderMap(parameters, dihedrals)

# Add the new callback (passed as a class, not as an instance)
e_map.add_callback(LearningRateLogger)

Output files are saved to runs/lr_scheduler/run0 as defined in 'main_path' in the parameters.

Saved a text-summary of the model and an image in runs/lr_scheduler/run0, as specified in 'main_path' in the parameters.

Now we train the model.

[9]:

history = e_map.train()

100%|█████████████████████████| 100/100 [00:03<00:00, 26.30it/s, Loss after step 100=31.4]

Saving the model to runs/lr_scheduler/run0/saved_model_2024-12-29T13:56:23+01:00.keras. Use `em.EncoderMap.from_checkpoint('runs/lr_scheduler/run0')` to load the most recent model, or `em.EncoderMap.from_checkpoint('runs/lr_scheduler/run0/saved_model_2024-12-29T13:56:23+01:00.keras')` to load the model with specific weights..

This model has a subclassed encoder, which can be loaded independently. Use `tf.keras.load_model('runs/lr_scheduler/run0/saved_model_2024-12-29T13:56:23+01:00_encoder.keras')` to load only this model.

This model has a subclassed decoder, which can be loaded independently. Use `tf.keras.load_model('runs/lr_scheduler/run0/saved_model_2024-12-29T13:56:23+01:00_decoder.keras')` to load only this model.

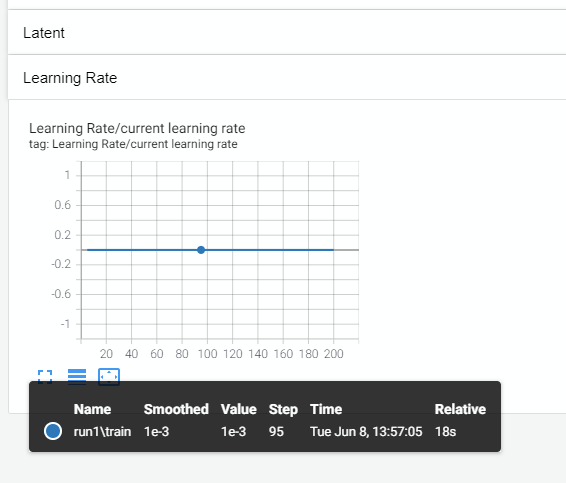

Now we can see the current learning rate in TensorBoard. Without a scheduler, it stays at a constant value of 0.001.

Write a learning rate scheduler

We can write a learning rate scheduler either by providing intervals of training steps and the associated learning rate:

def lr_schedule(step):
    """
    Returns a custom learning rate that decreases as steps progress.
    """
    learning_rate = 0.2
    if step > 10:
        learning_rate = 0.02
    if step > 20:
        learning_rate = 0.01
    if step > 50:
        learning_rate = 0.005
    return learning_rate

Or by using a function that gives us a learning rate:

def scheduler(step, lr=1, n_steps=1000):
    """
    Returns a custom learning rate that decreases based on an exp function as steps progress.
    """
    if step < 10:
        return lr
    else:
        return lr * tf.math.exp(-step / n_steps)
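To check what the two styles produce, both can be evaluated step by step without TensorFlow. This sketch swaps tf.math.exp for math.exp and rewrites the if-chain as a lookup table searched with bisect; that rewrite is our own design choice, and the names BOUNDARIES, RATES, piecewise_lr and exp_lr are hypothetical. A table is easier to extend than an if-chain when a schedule has many intervals.

```python
import bisect
import math

# Interval-based schedule from above, written as a lookup table.
# The learning rate switches once the step exceeds a boundary
# (step > 10, step > 20, step > 50), which bisect_left reproduces.
BOUNDARIES = [10, 20, 50]
RATES = [0.2, 0.02, 0.01, 0.005]


def piecewise_lr(step):
    """Return the learning rate of the interval that contains `step`."""
    return RATES[bisect.bisect_left(BOUNDARIES, step)]


def exp_lr(step, lr=1.0, n_steps=1000):
    """Pure-Python version of the exponential scheduler above."""
    if step < 10:
        return lr
    return lr * math.exp(-step / n_steps)


for step in [0, 10, 11, 21, 51, 100]:
    print(step, piecewise_lr(step), round(exp_lr(step), 4))
```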

Below is an example combining both:

[10]:

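def scheduler(step, lr=1):
    """
    Returns a custom learning rate that decreases based on an exp function as steps progress.
    """
    if step < 10:
        # keep the learning rate constant for the first 10 steps
        return lr
    else:
        # when called by LearningRateScheduler, `lr` is the current learning rate,
        # so multiplying by exp(-0.1) (about 0.905) every step decays it exponentially
        return lr * tf.math.exp(-0.1)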

This scheduler function can be passed directly to the built-in tf.keras.callbacks.LearningRateScheduler callback.

[11]:

callback = tf.keras.callbacks.LearningRateScheduler(scheduler)

Then we add it to a new EncoderMap instance, together with our learning rate logger.

[12]:

parameters = em.Parameters(
    tensorboard=True,
    periodicity=2*np.pi,
    main_path=em.misc.run_path('runs/lr_scheduler'),
    n_steps=50,
    summary_step=1
)

e_map = em.EncoderMap(parameters, dihedrals)
e_map.add_callback(LearningRateLogger)
e_map.add_callback(callback)

Output files are saved to runs/lr_scheduler/run1 as defined in 'main_path' in the parameters.

Saved a text-summary of the model and an image in runs/lr_scheduler/run1, as specified in 'main_path' in the parameters.
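Because tf.keras.callbacks.LearningRateScheduler passes the optimizer's current learning rate as the second argument, the factor exp(-0.1) is applied on top of the previous value at every step from step 10 onward, so the rate used at step s >= 10 is 0.001 * exp(-0.1 * (s - 9)). The pure-Python sketch below mimics that loop to show the compounding. simulate_lr_scheduler is a hypothetical helper, not part of Keras or EncoderMap, and the sketch assumes that each EncoderMap training step corresponds to one Keras epoch and that the initial rate is 0.001, as in the first run.

```python
import math


def scheduler(step, lr=1):
    """Same logic as the scheduler above, with math.exp instead of tf.math.exp."""
    if step < 10:
        return lr
    return lr * math.exp(-0.1)


def simulate_lr_scheduler(schedule, initial_lr, n_epochs):
    """Mimic how a Keras LearningRateScheduler feeds the schedule.

    At the start of every epoch the callback calls schedule(epoch, current_lr)
    and stores the result as the new learning rate, so a multiplicative
    schedule compounds from epoch to epoch.
    """
    lr = initial_lr
    history = []
    for epoch in range(n_epochs):
        lr = schedule(epoch, lr)
        history.append(lr)
    return history


# Assumption: one EncoderMap training step == one Keras epoch; 50 matches n_steps.
lrs = simulate_lr_scheduler(scheduler, initial_lr=0.001, n_epochs=50)
print(lrs[:11])
print(lrs[-1], 0.001 * math.exp(-0.1 * 40))
```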

[13]:

history = e_map.train()

100%|████████████████████████████| 50/50 [00:03<00:00, 15.64it/s, Loss after step 50=38.3]

Saving the model to runs/lr_scheduler/run1/saved_model_2024-12-29T13:56:26+01:00.keras. Use `em.EncoderMap.from_checkpoint('runs/lr_scheduler/run1')` to load the most recent model, or `em.EncoderMap.from_checkpoint('runs/lr_scheduler/run1/saved_model_2024-12-29T13:56:26+01:00.keras')` to load the model with specific weights..

This model has a subclassed encoder, which can be loaded independently. Use `tf.keras.load_model('runs/lr_scheduler/run1/saved_model_2024-12-29T13:56:26+01:00_encoder.keras')` to load only this model.

This model has a subclassed decoder, which can be loaded independently. Use `tf.keras.load_model('runs/lr_scheduler/run1/saved_model_2024-12-29T13:56:26+01:00_decoder.keras')` to load only this model.

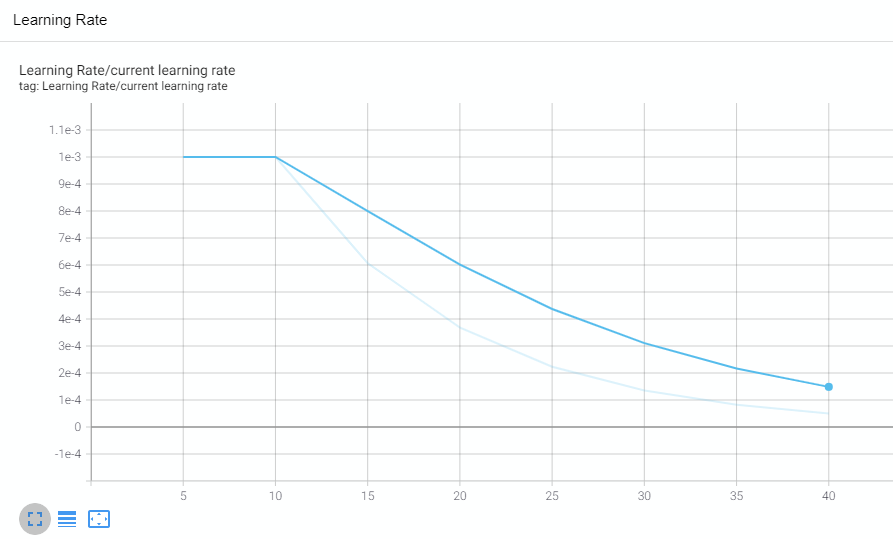

Here’s what TensorBoard should look like:

And here’s the learning rate plotted from the history.

[14]:

import plotly.express as px

px.line(history.history["lr"])

Conclusion

Learning rate schedulers can help prevent overtraining while still slightly improving the predictive power of your neural network model. Thanks to EncoderMap’s modularity, they are simple plug-in solutions.