Notebook Gallery

This page collects static renders of EncoderMap’s example notebooks. You can run them interactively on Google Colab or MyBinder, or on your local machine after cloning EncoderMap’s repository.

Starter Notebooks

The starter notebooks guide you through your first steps with EncoderMap.

Get started with EncoderMap

Advanced EncoderMap usage with MD data

Upload your own data and use this notebook

Intermediate Notebooks

The intermediate notebooks introduce more advanced techniques and explore newer features of EncoderMap.

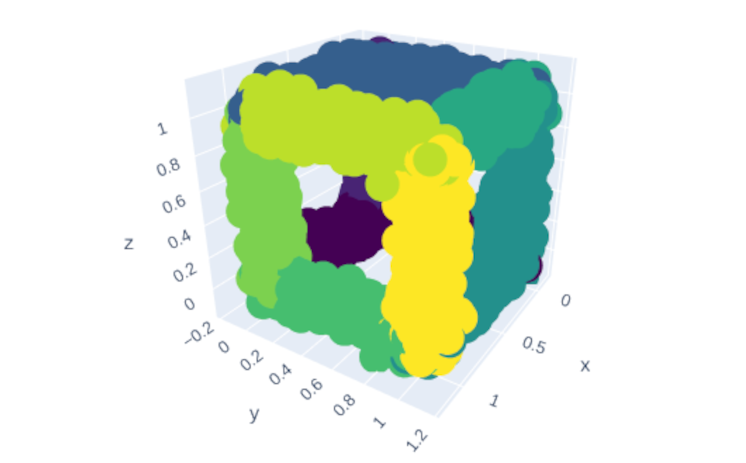

Training diverse topologies

MD Notebooks

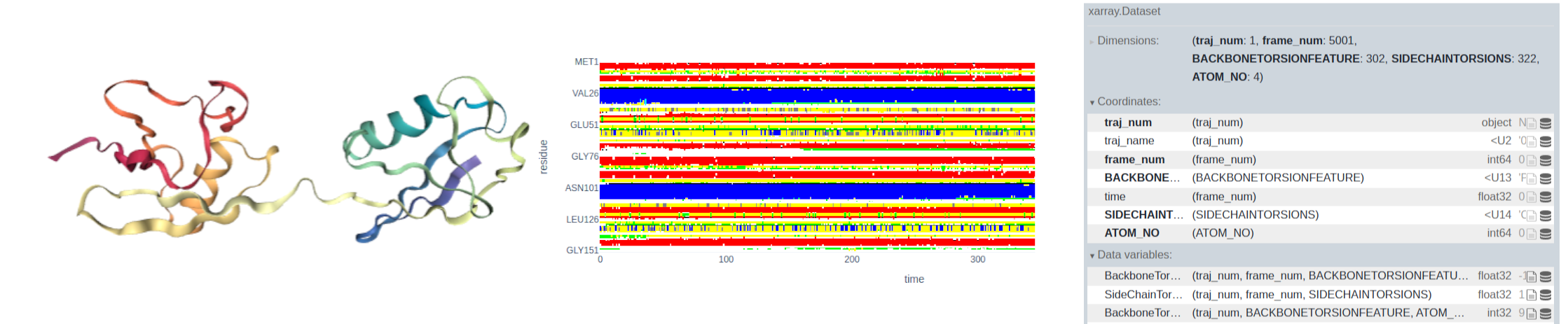

The MD notebooks describe in more detail how EncoderMap handles MD data. They show how to save and load large MD datasets and how to use them to train EncoderMap. They also explain the terms feature space and collective variable.
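To make the term feature space more concrete: features are quantities computed from atomic coordinates, and backbone dihedral angles are a typical example of such features for MD data. The sketch below computes a single dihedral angle from four atom positions in plain Python. It is our own illustration, not EncoderMap's implementation; the helper names (`sub`, `dot`, `cross`, `scale`, `dihedral`) are hypothetical, and EncoderMap itself computes features for whole trajectories with its own tooling.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    """Component-wise difference a - b."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    """Scalar product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    """Cross product a x b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def scale(a: Vec, s: float) -> Vec:
    """Multiply a vector by a scalar."""
    return (a[0] * s, a[1] * s, a[2] * s)


def dihedral(p0: Vec, p1: Vec, p2: Vec, p3: Vec) -> float:
    """Hypothetical helper: dihedral angle (degrees, -180..180) of four atoms."""
    b0 = sub(p0, p1)  # bond vector from the second atom back to the first
    b1 = sub(p2, p1)  # central bond
    b2 = sub(p3, p2)  # bond vector from the third atom to the fourth
    b1 = scale(b1, 1.0 / math.sqrt(dot(b1, b1)))  # normalize the central bond
    # Project the outer bonds onto the plane perpendicular to the central bond.
    v = sub(b0, scale(b1, dot(b0, b1)))
    w = sub(b2, scale(b1, dot(b2, b1)))
    x = dot(v, w)
    y = dot(cross(b1, v), w)
    return math.degrees(math.atan2(y, x))


# Outer atoms on the same side of the central bond (cis arrangement).
print(dihedral((1, 0, 0), (0, 0, 0), (0, 0, 1), (1, 0, 1)))  # prints 0.0
```

Stacking such angles for every residue and frame yields the high-dimensional feature space that a dimensionality reduction method then maps to a few dimensions.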

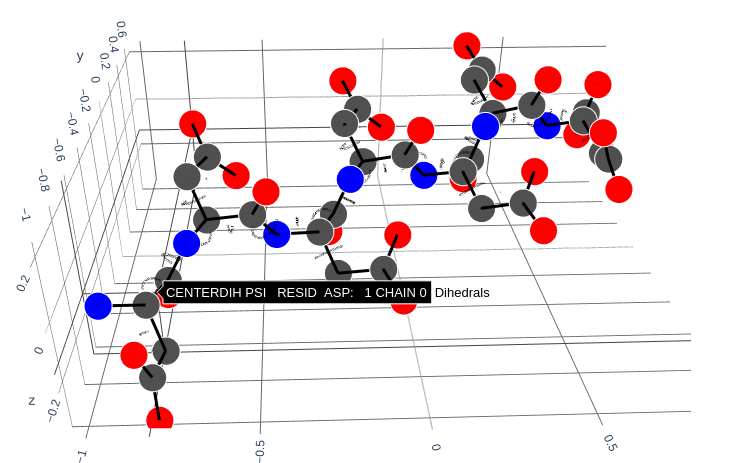

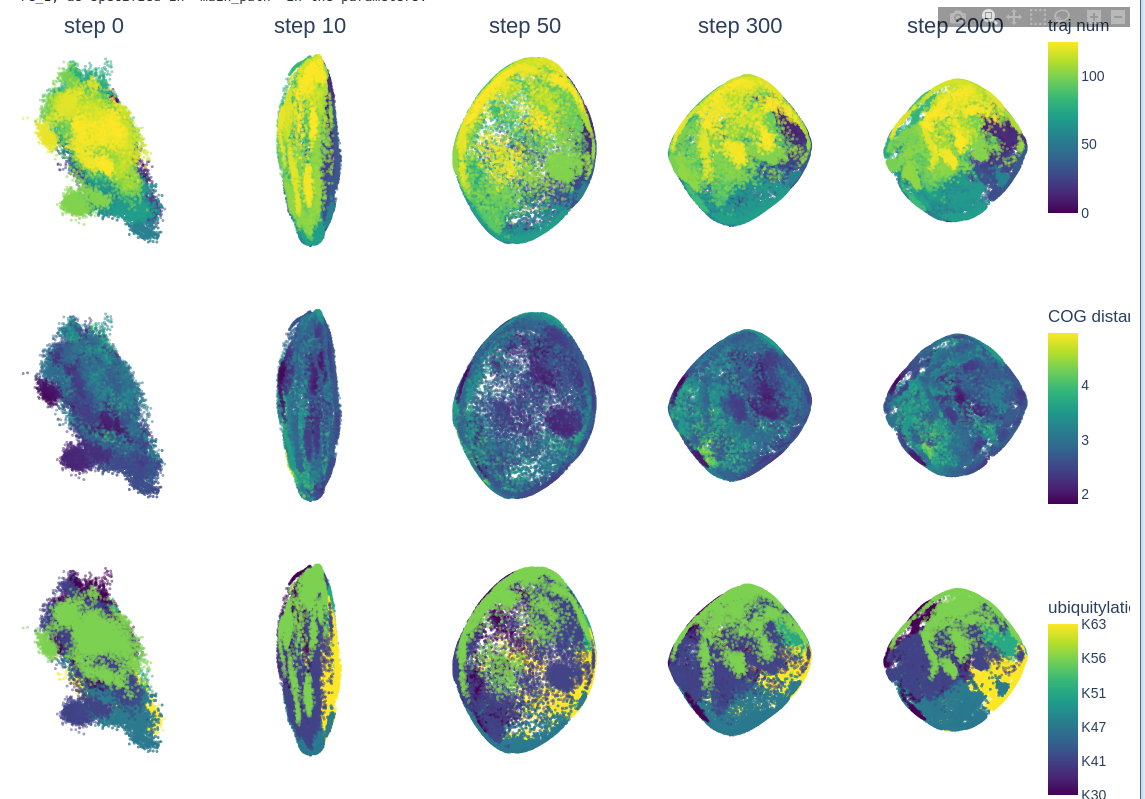

Trajectory Ensembles

Customization Notebooks

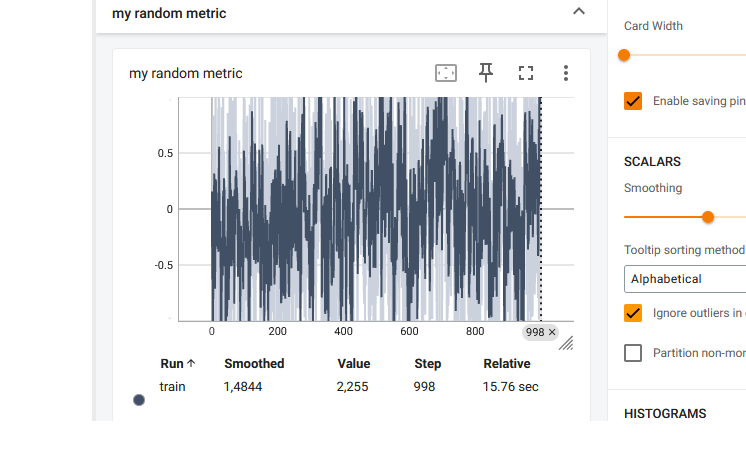

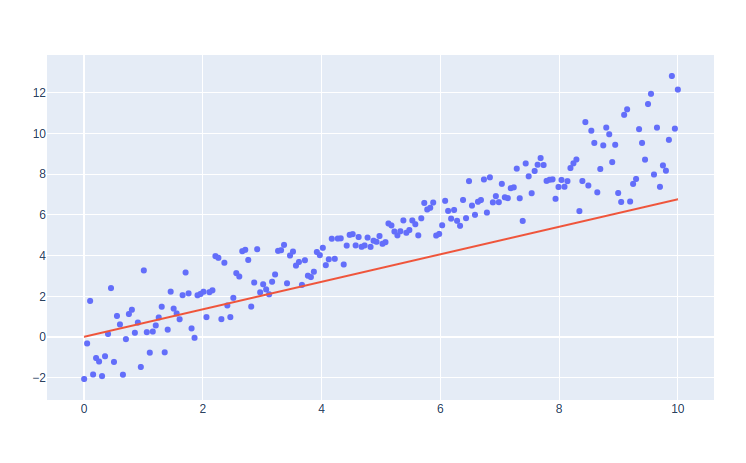

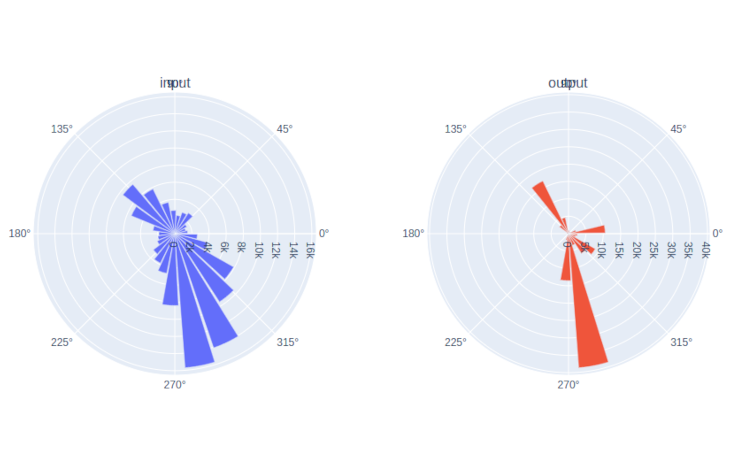

These notebooks help you customize EncoderMap. Tools such as TensorBoard logging help you understand how EncoderMap trains on your data. You will also learn how to implement new cost functions and adjust the learning rate of the neural network.
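As a library-agnostic illustration of what adjusting the learning rate during training means, the function below implements exponential decay in plain Python. It follows the formula `initial_lr * decay_rate ** (step / decay_steps)`, which TensorFlow's `ExponentialDecay` schedule also uses. The function name and default values are hypothetical and not EncoderMap's API; the learning-rate notebook shows how schedules are actually used with EncoderMap.

```python
def exponential_decay(step: int,
                      initial_lr: float = 1e-3,
                      decay_rate: float = 0.5,
                      decay_steps: int = 1000,
                      staircase: bool = False) -> float:
    """Hypothetical helper: learning rate after `step` training steps.

    With staircase=True the rate drops in discrete jumps every
    `decay_steps` steps instead of decaying smoothly.
    """
    exponent = step / decay_steps
    if staircase:
        exponent = step // decay_steps  # integer division gives a step function
    return initial_lr * decay_rate ** exponent


# The smooth schedule halves the learning rate every 1000 steps.
for step in (0, 500, 1000, 2000):
    print(step, exponential_decay(step))
```

A decaying rate lets the optimizer take large steps early in training and finer steps once the loss has settled, which is the usual motivation for a schedule.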

Monitor in TensorBoard

Add new loss functions

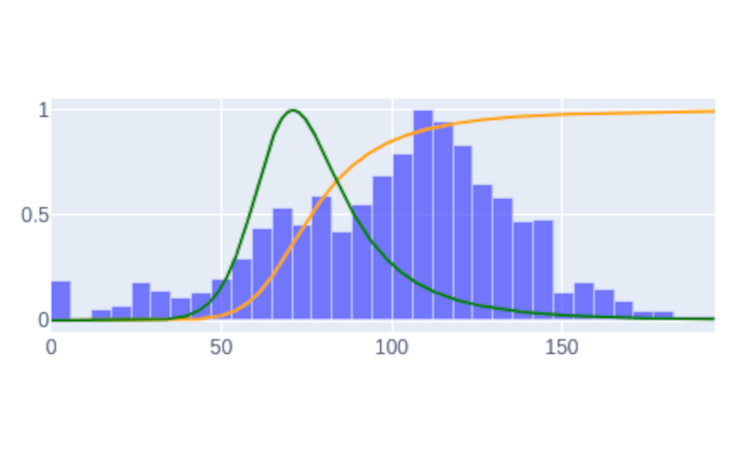

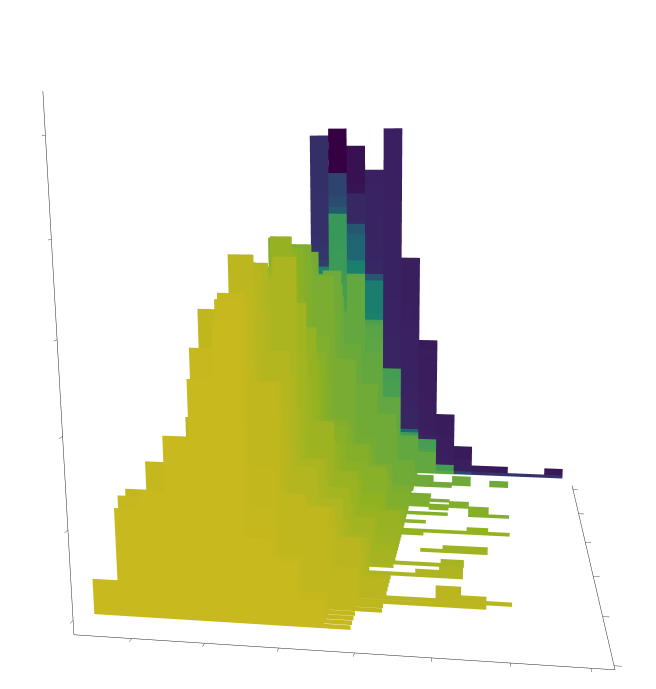

Write images to TensorBoard

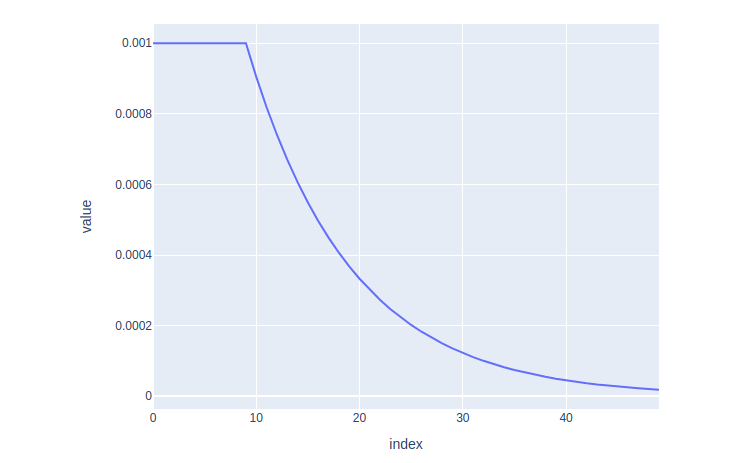

Adjust the learning rate

Publication Notebooks

These notebooks contain the analysis code for the upcoming publication “EncoderMap III: A dimensionality reduction package for feature exploration in molecular simulations”, which features the new version of the EncoderMap package. Trained network weights are available upon reasonable request, either by raising an issue on GitHub (AG-Peter/encodermap#issues) or by contacting the authors of the publication.

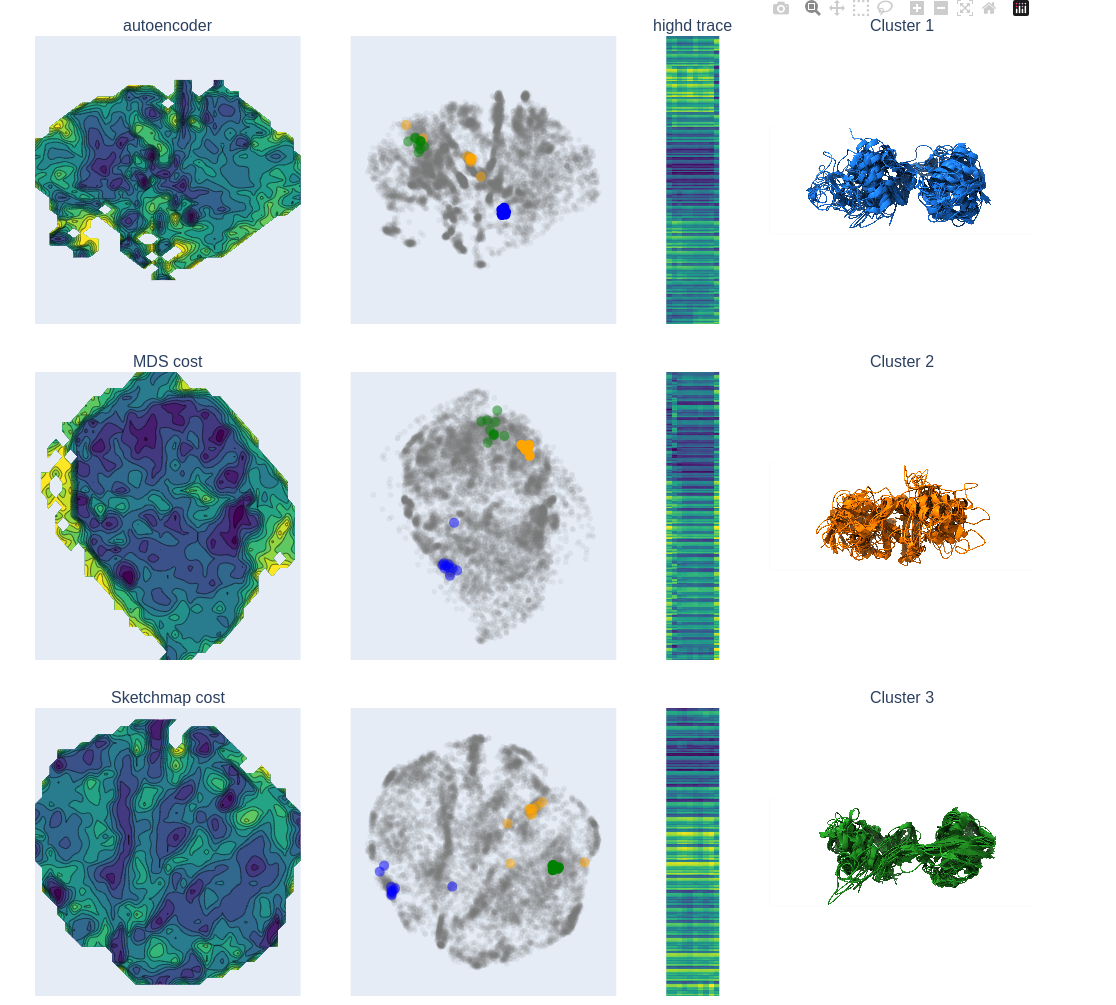

Notebook to create Figure 1 of the publication

Notebook to create Figure 2 of the publication

Notebook to create Figure 3 of the publication

Notebook to create Figure 5 of the publication

Starter Notebooks

MD Notebooks

- Working with trajectory ensembles

Customization Notebooks

- Customize EncoderMap: Logging Custom Scalars

- Customize EncoderMap: Custom loss functions

- Logging Custom Images

- Learning Rate Schedulers

Publication Notebooks

Static Code Examples